Marietta is a small town home to around 13,000 residents nestled along the Ohio River. It boasts charming independent stores and captivating museums dedicated to regional heritage, as well as stunning vistas of the nearby Appalachian foothills. It has been roughly one year since my last visit there, which means I should plan another trip soon. I inquired.

ChatGPT

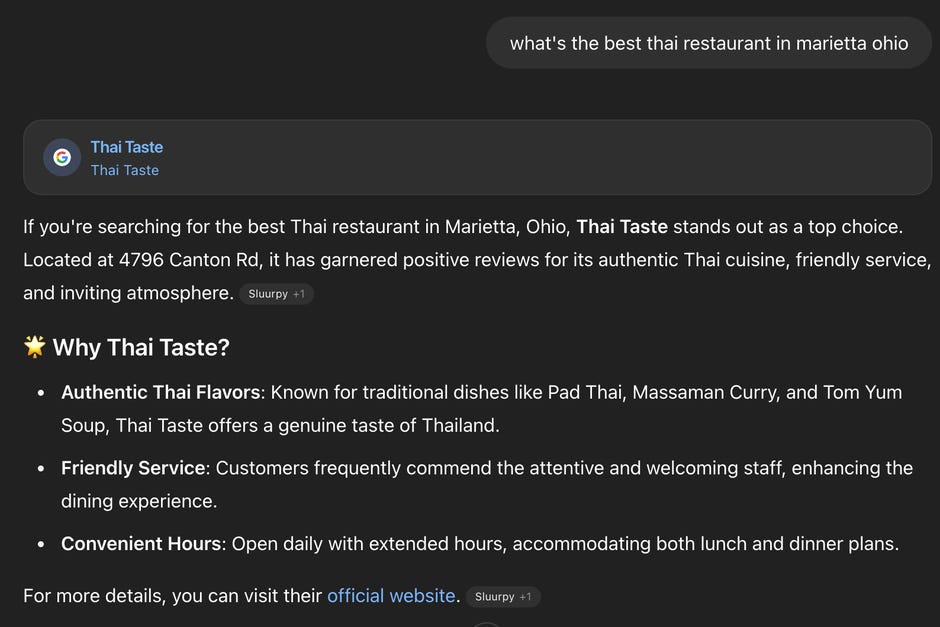

For a restaurant suggestion — the top Thai spot in town.

chatbot

required: Thai cuisine. However, the issue is that this restaurant is located in Marietta, Georgia. There isn’t a Thai restaurant in the city of Ohio.

The question of which Thai place is the best in a small town came up casually in a discussion with Katy Pearce, an associate professor at the University of Washington and a member of the UW Center for an Informed Public. The stakes in this instance are low; Marietta, Ohio, has several good dining spots, and there is a Thai restaurant not far away, near Parkersburg, West Virginia. However, her point highlights a crucial issue: when you use an AI chatbot like a search tool, you can receive confident-sounding responses that are not actually helpful. Like golden retrievers, Pearce said, these bots are eager to please, and they aim to satisfy a request regardless of accuracy.

Large language models (LLMs), such as OpenAI’s ChatGPT, Anthropic’s Claude and Google’s Gemini, are increasingly becoming go-to sources for finding information about the world. They’re displacing traditional search engines as the first, and often only, place many people go when they have a question. Technology companies are rapidly injecting generative AI into search engine results, summaries in news feeds and other places we get information. That makes it increasingly important that we know how to tell when it’s giving us good information and when it isn’t, and that we treat everything from an AI with the right level of skepticism.

(Disclaimer: The parent company of newsinpo.site, Ziff Davis, filed a lawsuit against OpenAI in April, claiming that OpenAI violated Ziff Davis’ copyright during the development and operation of their AI systems.)

Sometimes, opting for AI over a search engine can be beneficial. Pearce mentioned that she frequently turns to a large language model for minor queries where there is likely sufficient data available online and within the model’s training set to generate a satisfactory response. For instance, restaurant suggestions or simple DIY advice tend to be fairly dependable.

Other situations are more fraught. If you rely on a chatbot for information about your health, your finances, news or politics, those hallucinations could have serious consequences.

“It gives you this authoritative, confident answer, and that is the danger in these things,” said Alex Mahadevan, director of the MediaWise media literacy program at the Poynter Institute. “It’s not always right.”

Below are some aspects to watch for along with tips on verifying information when using generative AI.

Why you shouldn’t always rely on artificial intelligence

To grasp why chatbots sometimes provide incorrect information or fabricate details, it helps to understand how they work. Large language models (LLMs) are trained on vast datasets, which lets them estimate what content is likely to come next given an input. Essentially, they function as “prediction engines,” generating responses according to the statistical probability that those responses answer your question, Mahadevan explained. They do not verify factual accuracy; they produce the reply that is statistically most likely to be right.

When I asked for a Thai restaurant in a town called Marietta, the model could not find one that met my exact criteria in that town, but it did find one in another Marietta about 600 miles to the south. By the model’s internal calculations, that answer was more likely to be right than the possibility that I was asking about something that does not exist.

Hence Pearce’s golden retriever problem. Like the beloved family dog, the AI tool does what it was trained to do: try its best to make you happy.
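As a rough illustration of the “prediction engine” idea, here is a toy Python sketch. It is nowhere near how a real LLM is built: the three-sentence corpus is made up for this example, and `predict_next` is a hypothetical helper that simply counts which word most often follows another. The point is only that the statistically likeliest continuation wins, whether or not it is the one you meant.

```python
from collections import Counter, defaultdict

# Tiny made-up "training set" (an assumption for illustration only);
# real models learn from vastly more text.
corpus = [
    "the best thai restaurant in marietta georgia",
    "the best thai restaurant in marietta georgia",
    "the best pizza restaurant in marietta ohio",
]

# Count which word follows each word in the corpus.
following = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1


def predict_next(word):
    """Return the most frequent follower of `word` and its estimated probability."""
    counts = following[word]
    best, n = counts.most_common(1)[0]
    return best, n / sum(counts.values())


word, prob = predict_next("marietta")
print(word, round(prob, 2))  # prints: georgia 0.67
```

With this made-up data, “georgia” follows “marietta” twice and “ohio” only once, so the sketch picks Georgia. Nothing in the code checks which Marietta the question was actually about.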

When a large language model fails to locate the best response to your query, it might provide an answer that seems accurate yet is incorrect. Alternatively, it may fabricate information seemingly out of nowhere that looks convincing. This can be quite deceptive. Some of these mistakes, known as hallucinations, are quite evident. You would readily notice that you should not put glue on your pizza to make the cheese stick. Others are subtler, such as when a model generates false references and credits them to genuine authors.

“Should the tool lack the necessary information, it generates something novel,” Pearce stated. “This newly created content might be completely inaccurate.”

The training data for these models significantly affects their accuracy. Many of these large models were trained on almost the entire internet, which includes both accurate, factual material and casual comments from online discussion forums.

People make things up too. However, a person can more reliably tell you where their information came from or explain how they reached their conclusions. Even when an AI model cites its sources, it may struggle to offer a precise chain of evidence.

How can one determine what to rely on?

Know the stakes

Sometimes the accuracy of what you get from a chatbot or another generative AI tool hardly matters; sometimes it makes all the difference. It’s essential to understand the consequences of relying on unreliable information when making decisions, Pearce said.

“When people are using generative AI tools for getting information that they hope is based in fact-based reality, the first thing that people need to think about is: What are the stakes?” she said.

Looking for some music playlist ideas? No big deal here. If a chatbot comes up with a fake Elton John song, there’s really no reason to stress about it.

However, the stakes are higher where your medical treatment, your financial decisions or your ability to obtain reliable news and information about what’s happening in the world is concerned.

Remember the types of data the models were trained on. For health questions, remember that while the training data may have included some medical journals, it may also have included unscientific social media posts and message board threads.

Always fact-check any information that could lead to you making a big decision — think of things that could affect your money or your life. The information behind those decisions requires more scrutiny, and gen AI’s tendency to mix things up or make things up should raise doubts before you act.

“If it’s something that you need to be fact-based, you need to absolutely triple-check it,” Pearce said.

Gen AI changes how you verify information online

Poynter’s MediaWise initiative was teaching media literacy skills long before ChatGPT emerged toward the end of 2022. Before generative AI, one crucial skill was assessing the credibility of the source. If you come across a claim on Facebook or in a Google search that a celebrity or politician has died, Mahadevan said, it is vital to scrutinize who is spreading the information. Is an established news outlet reporting it? Then it likely holds more weight. If the only confirmation comes from a distant connection, such as your cousin’s neighbor’s former spouse, skepticism is warranted.

Although this guidance is clear, many individuals either disregard it or fail to comprehend it. As Mahadevan pointed out, “People have historically struggled with assessing information found on the internet.”

When it comes to AI, that guidance isn’t as effective as it once was. Chatbots can provide responses devoid of context. When you pose a query, they offer an answer, which tempts you to treat the AI model as the authority. With a conventional Google search or a social media post, the source is usually clearly shown. Some chatbots cite their sources, but often the response arrives with no references at all.

“Behind this information, we’re not quite sure who’s actually involved,” Mahadevan stated.

Instead, you might need to look elsewhere to find the primary sources of information. Generative AI systems, like social media platforms, are channels for distributing information rather than original sources themselves. Just as the “who” behind a social media post matters, so does the original source of any information you get from a chatbot.


How to get the truth (or something closer to it) from AI

The primary method for ensuring accurate online information continues to be the same as it has been: consult several reliable sources.

However, when using generative artificial intelligence, consider these methods to enhance the output quality:

Utilize a resource that offers citations.

Many chatbots and other generative AI models now include citations in their replies, although you may need to ask explicitly or enable a setting. You can find similar functionality in other applications of AI, such as Google’s AI Overviews in search results.

The presence of citations themselves is good — that response may be more reliable than one without citations — but the model might be making up sources or misconstruing what the source says. Especially if the stakes are high, you probably want to click on the links and make sure the summary you’ve been provided is accurate.

Inquire about the AI’s level of confidence.

You can obtain a more accurate response from a chatbot by formulating your query thoughtfully. A useful technique is to request confidence levels. For instance: “Can you tell me when the original iPhone was released? Please also indicate how sure you are of this information.”

You still need to assess the response critically. If the chatbot tells you that the original iPhone was released in 1066, you should remain skeptical even if it claims to be 100% sure.

Mahadevan recommends keeping in mind the distance between your chatbot window and the original source of the information. “It should be approached as though it’s hearsay,” he said.

Don’t simply pose a hasty query.

Adding instructions such as “share your degree of certainty,” “include references for your data,” “present differing perspectives,” “rely solely on credible resources,” and “thoroughly scrutinize your facts” can improve your prompt. Pearce said that effective prompts tend to be long: at least a paragraph, just to ask a question.

You can likewise request it to adopt a specific character to obtain responses in the appropriate tone or from the viewpoint you desire.

“When you’re prompting it, adding all these caveats is really important,” she said.
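One way to make these caveats a habit is to keep them in a reusable template. The Python sketch below is a hypothetical helper, not part of any chatbot’s API: `build_prompt` and `CAVEATS` are names invented here, and the caveat wording comes from the phrases listed above. It only assembles text that you could paste into whichever chatbot you use.

```python
# Caveats taken from the phrases quoted in the article.
# CAVEATS and build_prompt are hypothetical names used for illustration.
CAVEATS = [
    "Share your degree of certainty.",
    "Include references for your data.",
    "Present differing perspectives.",
    "Rely solely on credible resources.",
    "Thoroughly scrutinize your facts.",
]


def build_prompt(question, persona=None):
    """Combine an optional persona, the question, and the caveats into one prompt."""
    parts = []
    if persona:
        parts.append(f"Answer as {persona}.")
    parts.append(question.strip())
    parts.extend(CAVEATS)
    return " ".join(parts)


prompt = build_prompt(
    "When was the original iPhone released?",
    persona="a careful technology historian",
)
print(prompt)
```

The persona argument is optional, so the same helper covers both a plain question and one asked from a specific viewpoint. The answer still needs checking against reliable sources.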

Bring your own data

LLMs may struggle most when pulling information from their training data or from their own searches for information on the internet. But if you provide the documents, they can perform better.

Both Mahadevan and Pearce mentioned that they have achieved positive outcomes using generative AI tools for summarizing or distilling insights from extensive documents or datasets.

Pearce mentioned that when looking for a car, she gave ChatGPT all the details it needed, such as PDFs of listings and Carfax reports, and instructed it to concentrate solely on those specific options. The AI delivered an extensive and thorough evaluation of each vehicle. It did not suggest additional cars found at random online, which is what Pearce wanted to avoid.

“I had to provide all those details to it,” she said. “If I had simply directed it to check any used car dealer’s website, it wouldn’t have been so detailed.”

Utilize AI as an initial reference

Mahadevan drew parallels between generative AI today and the early perceptions of Wikipedia’s credibility years back. At that time, many doubted whether an openly editable platform could maintain accuracy. However, Wikipedia offers a significant benefit: its references are readily accessible. Starting with a Wikipedia article often leads readers to explore the cited works, providing them with a fuller understanding of their topic. Mahadevan calls this practice of tracing information back to its source “reading upstream.”

“The main point I aim to convey when teaching media literacy is how to become an engaged user of information,” he stated. Even though generative artificial intelligence might isolate you from direct sources, this isn’t inevitable. It simply requires putting in a bit more effort to uncover them.