This year’s Google I/O developer conference was filled with AI advancements. Google unveiled the newest updates to its Gemini AI platform and launched its expensive new AI Ultra subscription plan (spoiler: it’s $250 per month).

Google also introduced its new Flow app, which expands its video-generation capabilities, and closed the keynote with a demo of its Android XR glasses.

The company also proudly announced that AI usage and performance numbers are up. (With its new Ironwood Tensor Processing Unit, rated at 42.5 exaflops per pod, coming to Google Cloud later this year, they’ll keep rising.)

For a detailed play-by-play of the event, see our live updates from Google I/O.

On May 13, Google held a separate Android-focused event, where it launched Android 16 and debuted its new Material 3 Expressive interface, security enhancements and an update on Gemini integration and features.

Many of the new AI features are available through one of Google’s subscription tiers. AI Pro is essentially a rebranded version of Google’s $20-a-month Gemini Advanced plan with some added features, while Google AI Ultra is a newer, pricier option ($250 per month, currently half off for the first three months) that provides access to the latest, most capable and least usage-limited versions of all of Google’s tools and models. It also includes a prototype for managing AI agents, along with the 30 terabytes of storage you’ll need to hold it all. Both plans are available starting today.

With Personalized Smart Replies, Google wants to make your automated responses sound more like you. The feature draws on data on your device to surface relevant information. It’s coming to Gmail for subscribers this summer and will eventually expand across all platforms.

Google also boasted that Gemini had beaten Pokémon Blue. Staff writer Zach McAuliffe takes issue with that claim, because you do NOT tamper with his childhood memories.

There are also numerous new and improved models, better coding tools and other developer-focused features you’d expect at a developer conference. The announcements also covered Gemini Live, previously part of Project Astra, an interactive, agentic, voice-driven AI app with a broad feature set. As Managing Editor Patrick Holland explains, “Astra serves as a testing ground for features; once they’re refined enough, they transition into Gemini Live.” For researchers, NotebookLM incorporates Gemini Live to improve its… everything.

It’s available now in the US.

Gemini in Chrome and AI Mode

People (that is, those over 18) who pony up for the subscriptions, plus people on the Chrome Beta, Dev and Canary tracks, will be able to try out the company’s expanded Gemini integration with Chrome: summaries, research and agentic chats based on what’s displayed on your screen, much like Gemini Live does for phones (which, by the way, is now free on both Android and iOS). The Chrome version, however, is better suited to the kinds of tasks you do on a computer than on a phone. (Microsoft already offers something similar with Copilot in its own Edge browser.)

Eventually, Google plans for Gemini in Chrome to synthesize information across multiple tabs and to support voice-guided navigation.

The company is also expanding the ways you can interact with AI Overviews in Google Search as part of AI Mode, adding more agentic help with shopping. AI Mode appears as a new tab in Search or directly in the search bar, and it’s available now. It offers deeper searches along with Personal Context, which uses everything Google knows about your preferences and behavior to provide tailored recommendations and personalized responses.

The company also detailed its new AI Mode for shopping, which offers an improved conversational shopping experience, a checkout that tracks the best prices and an updated “try on” interface that lets you upload a photo of yourself rather than seeing clothes modeled on a generic body.

We have reservations about the feature: for one thing, it sounds like a privacy nightmare, and for another, I don’t really want to see clothes on the “real” me.

Google plans to release it soon, but the updated “try on” feature is already available in the US through Search Labs.

Google Beam

Formerly known as Project Starline, Google Beam is an AI-enhanced version of the company’s 3D videoconferencing technology. It uses an array of six cameras to capture you from every angle. AI then stitches those views together, uses head tracking to follow your movements and streams the result at up to 60 frames per second.

It uses a light field display that doesn’t require any special equipment, though that technology tends to be finicky when viewed from off-angle. HP has long worked on large-scale scanning projects, including 3D scanning, so it’s no surprise that it has partnered with Google on Beam.

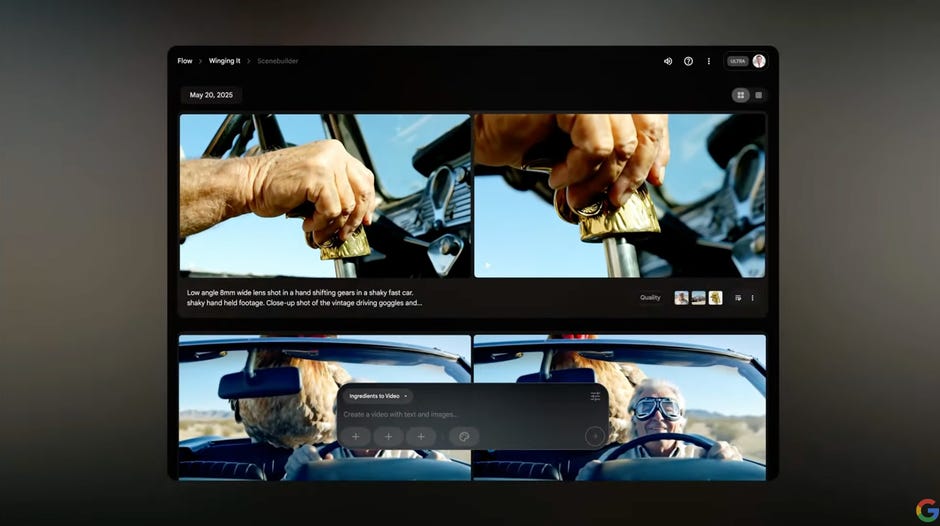

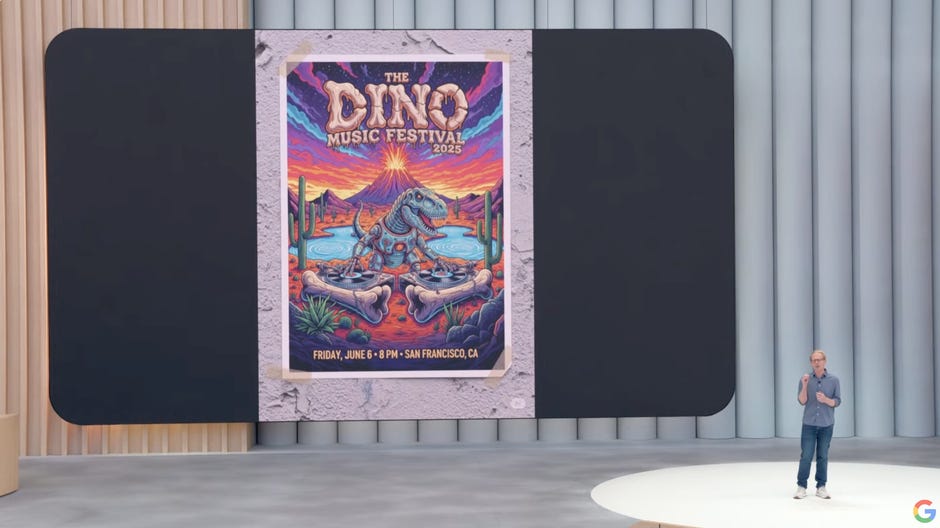

Flow and other creative tools

Google Flow is a new tool that builds on Imagen 4 and Veo 3 to do things like generate AI video clips and stitch them into longer sequences with a single prompt, while keeping them consistent from scene to scene. It also offers editing tools such as camera controls. Flow is available as part of the Google AI Ultra plan.

Imagen 4 produces more detailed images, with better tonal quality and better handling of text and typography, and it’s faster. Veo 3, also available now, has a better grasp of physics and can natively generate audio, including sound effects, background noise and dialogue.

All of these are available with the AI Pro plan. Google’s SynthID generative AI detection tool is also available today.

First published on May 21, 2025 at 10:17 a.m. PT.